GSGAN: Adversarial Learning for Hierarchical Generation of 3D Gaussian Splats

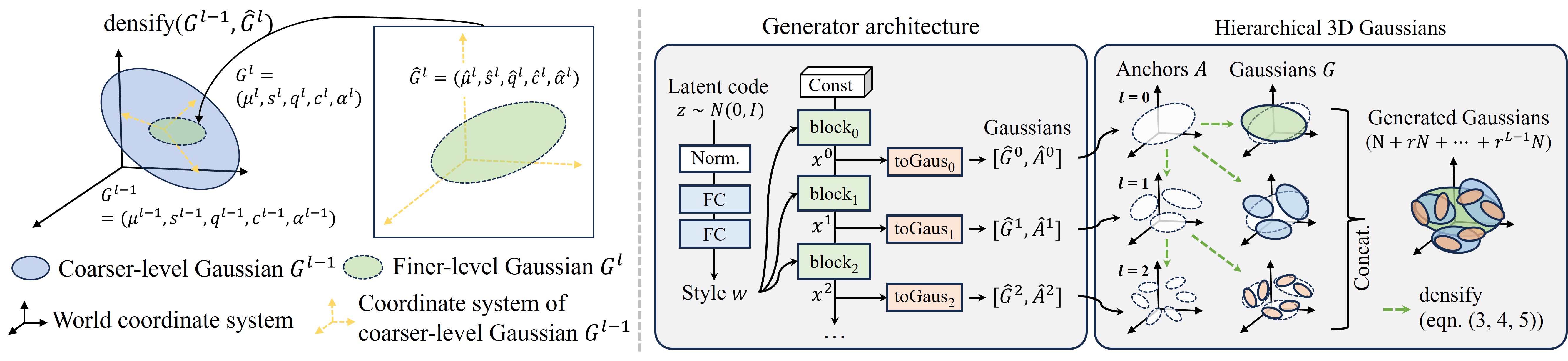

Most advances in 3D Generative Adversarial Networks (3D GANs) depend on ray casting-based volume rendering, which incurs high rendering costs. One promising alternative is rasterization-based 3D Gaussian Splatting (3D-GS), which provides much faster rendering and an explicit 3D representation. In this paper, we use Gaussians as the 3D representation for 3D GANs, leveraging their efficient and explicit characteristics. However, in an adversarial framework, we observe that a naive generator architecture suffers from training instability and lacks the capability to adjust the scale of Gaussians. This leads to model divergence and visual artifacts, because there is no proper guidance for the initial positions of Gaussians and no densification to manage their scales adaptively. To address these issues, we introduce a generator architecture with a hierarchical multi-scale Gaussian representation that effectively regularizes the position and scale of generated Gaussians. Specifically, we design a hierarchy of Gaussians in which finer-level Gaussians are parameterized by their coarser-level counterparts: the positions of finer-level Gaussians are located near their coarser-level counterparts, and the scale decreases monotonically as the level becomes finer, so that the hierarchy models both coarse and fine details of the 3D scene. Experimental results demonstrate that our method achieves a significantly faster rendering speed (×100) than state-of-the-art 3D consistent GANs, with comparable 3D generation capability.

To handle the absence of guidance on the position and scale of Gaussians, we devise a method to regularize the Gaussian representation for 3D GANs, focusing on the position and scale parameters. To this end, we propose a generator architecture with a hierarchical Gaussian representation. This representation makes the Gaussians of adjacent levels dependent on one another, encouraging the generator to synthesize the 3D space in a coarse-to-fine manner. Specifically, we first introduce a locality constraint: the positions of fine-level Gaussians are parameterized by, and located near, their coarse-level counterparts, which narrows the set of possible positions for newly added Gaussians. We then design the scale of Gaussians to decrease monotonically as the level becomes finer, which helps the generator model the scene in both coarse and fine detail.
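
The two constraints described above can be illustrated with a minimal sketch. This is not the GSGAN generator: the names `Gaussian`, `spawn_children`, `build_hierarchy`, and `base_shrink`, the isotropic log-scale, the `tanh` and softplus parameterizations, and the random numbers standing in for network outputs are all assumptions made for illustration. The sketch only shows one way to guarantee that child Gaussians stay near their parent and are strictly smaller than it.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Gaussian:
    position: tuple[float, float, float]  # center of the Gaussian
    log_scale: float                      # isotropic scale, stored in log space (assumption)


def softplus(x: float) -> float:
    """Numerically stable softplus; the result is always >= 0."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def spawn_children(parent, raw_offsets, raw_shrinks, base_shrink=math.log(2.0)):
    """Derive finer-level Gaussians from one coarser-level Gaussian.

    raw_offsets and raw_shrinks stand in for unconstrained generator outputs.
    """
    parent_scale = math.exp(parent.log_scale)
    children = []
    for offset, raw in zip(raw_offsets, raw_shrinks):
        # Locality: tanh bounds each offset component to [-1, 1], so the child
        # center lies within one parent scale of the parent center on every axis.
        position = tuple(
            p + parent_scale * math.tanh(o) for p, o in zip(parent.position, offset)
        )
        # Monotone scale: the child log-scale is at least base_shrink below
        # the parent's, whatever value the raw output takes.
        log_scale = parent.log_scale - base_shrink - softplus(raw)
        children.append(Gaussian(position, log_scale))
    return children


def build_hierarchy(root, num_levels, children_per_parent, rng):
    """Build levels[0] (coarsest) through levels[num_levels - 1] (finest)."""
    levels = [[root]]
    for _ in range(num_levels - 1):
        finer = []
        for parent in levels[-1]:
            # Random values play the role of generator outputs here.
            offsets = [[rng.gauss(0.0, 1.0) for _ in range(3)]
                       for _ in range(children_per_parent)]
            shrinks = [rng.gauss(0.0, 1.0) for _ in range(children_per_parent)]
            finer.extend(spawn_children(parent, offsets, shrinks))
        levels.append(finer)
    return levels


levels = build_hierarchy(Gaussian((0.0, 0.0, 0.0), 0.0), num_levels=3,
                         children_per_parent=4, rng=random.Random(0))
print([len(level) for level in levels])
```

Because every child is expressed relative to its parent, the constraints hold by construction rather than through a penalty term, which is one plausible reading of "parameterized by their coarser-level counterparts".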

Naive model (FID=95.97, FFHQ-256)

Our model (FID=6.59, FFHQ-256)

With the proposed hierarchical Gaussian representation, we significantly improve the generation capability of 3D GANs that use a Gaussian representation (FID=6.59, compared to FID=95.97 for the naive model), while rendering much faster (~3 ms per image) than NeRF-based 3D GANs.

| Methods | 3D consistency | FID, FFHQ 256×256 | FID, FFHQ 512×512 | FID, AFHQ-Cat 256×256 | FID, AFHQ-Cat 512×512 | Rendering time 256×256 (ms) | Rendering time 512×512 (ms) |
|---|---|---|---|---|---|---|---|
| EG3D | | 4.80 | 4.70 | 3.41 | 2.72 | - | 15.5* |
| GRAM | ✓ | 13.8 | - | 13.4 | - | - | - |
| GMPI | ✓ | 11.4 | 8.29 | - | 7.67 | - | - |
| EpiGRAF | ✓ | 9.71 | 9.92 | 6.93 | - | - | - |
| Voxgraf | ✓ | 9.60 | - | 9.60 | - | - | - |
| GRAM-HD | ✓ | 10.4 | - | - | 7.67 | 173.0 | 197.9 |
| Mimic3D | ✓ | 5.14 | 5.37 | 4.14 | 4.29 | 106.8 | 402.1 |
| Ours | ✓ | 6.59 | 5.60 | 3.43 | 3.79 | 2.7 | 3.0 |
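
To make the rendering-speed comparison concrete, the short sketch below computes speed-up factors from the rendering times in the table above. It covers only the 3D consistent baselines that report timings at both resolutions; the dictionary layout and the `speedup` helper are illustrative names, not part of any released code.

```python
# Rendering times in milliseconds, copied from the table above.
render_ms = {
    "GRAM-HD": {256: 173.0, 512: 197.9},
    "Mimic3D": {256: 106.8, 512: 402.1},
    "Ours": {256: 2.7, 512: 3.0},
}


def speedup(baseline: str, resolution: int, ours: str = "Ours") -> float:
    """Return how many times faster `ours` renders than `baseline`."""
    return render_ms[baseline][resolution] / render_ms[ours][resolution]


for name in ("GRAM-HD", "Mimic3D"):
    for res in (256, 512):
        print(f"{name} @ {res}x{res}: {speedup(name, res):.1f}x")
```

With these numbers the speed-up ranges from about 40× (Mimic3D at 256×256) to about 134× (Mimic3D at 512×512), which is in line with the roughly ×100 figure quoted in the abstract.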

For any further questions, please contact hse1032@gmail.com.

@inproceedings{hyungsgan,
  title={GSGAN: Adversarial Learning for Hierarchical Generation of 3D Gaussian Splats},
  author={Hyun, Sangeek and Heo, Jae-Pil},
  booktitle={The Thirty-eighth Annual Conference on Neural Information Processing Systems}
}